Whenever computers are taught or programmed to accomplish specific tasks, the data they work with should be made as easy as possible for the machine to use. This means ensuring that the data is free from noise and irrelevant information, which is where the term data cleaning comes in. Data cleaning relies on various tools, including algorithms that purge useless data, along with the hope of one day developing models that can infer missing information without first having to remove data points labeled as outliers or train only on perfectly curated examples. Models perform best on clean data because it removes uncertainty and gives the business trustworthy insights. In this blog post, we explore data cleansing techniques and their importance, and examine common data pitfalls along with the techniques that reduce their impact.

Challenges With Data When Working With Machine Learning

Data used for machine learning comes with a number of common challenges, including:

Missing Values

Missing data can significantly degrade the quality of machine learning models. The gaps are most commonly filled through mean, median, or mode imputation. More sophisticated techniques, such as k-nearest neighbors imputation or predictive imputation, estimate the missing values from similar data points or from a machine learning model. Imputation leaves the dataset complete and ready for analysis.
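As a minimal sketch of the simple imputation strategies mentioned above, the following uses pandas on a made-up dataset. The column names and values are assumptions chosen purely for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical toy dataset; column names and values are illustrative only.
df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 40.0],
    "income": [30000.0, 42000.0, np.nan, 1000000.0],
    "city": ["Paris", "Lyon", None, "Paris"],
})

filled = df.copy()
# Mean imputation: reasonable when the column has no extreme values.
filled["age"] = filled["age"].fillna(filled["age"].mean())
# Median imputation: less sensitive to the one very large income.
filled["income"] = filled["income"].fillna(filled["income"].median())
# Mode imputation: the most frequent category fills categorical gaps.
filled["city"] = filled["city"].fillna(filled["city"].mode()[0])

print(filled)
```

The median is used for `income` because a single extreme value would pull the mean far away from the typical row.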

Outliers

Statistical measures such as the Z-score or the interquartile range (IQR) can be used to detect extreme values. Because outliers lie far from the rest of the data, they can distort the trends a model learns and make forecasts less accurate and dependable. Visualization tools also help identify outliers, so that one can decide whether to remove or adjust them.
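Both detection rules can be sketched in a few lines of NumPy. The sample values and the Z-score cut-off of 2.5 are assumptions. With very small samples a stricter cut-off such as 3.0 may never trigger, so the threshold should be tuned to the data.

```python
import numpy as np

# Hypothetical measurements with one obviously extreme value.
values = np.array([10, 12, 11, 13, 12, 11, 10, 12, 11, 95], dtype=float)

# Z-score rule: flag points far from the mean in units of standard deviation.
z_threshold = 2.5  # assumed cut-off; adjust to the dataset
z_scores = (values - values.mean()) / values.std()
z_outliers = values[np.abs(z_scores) > z_threshold]

# IQR rule: flag points more than 1.5 * IQR outside the middle 50% of the data.
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
iqr_outliers = values[(values < lower) | (values > upper)]

print("Z-score outliers:", z_outliers)
print("IQR outliers:", iqr_outliers)
```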

Inconsistent Data Formats

Data often varies in how it is presented, which can create problems at the analysis stage. One way to address this is to standardize formats, for example by writing all dates the same way or normalizing numerical values such as currency amounts. Consistent formats also improve the results of the models built on the data.
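The sketch below shows one possible way to standardize dates and currency strings using only the Python standard library. The helper names `to_iso_date` and `to_amount`, the list of accepted date formats, and the dollar-style input are all assumptions made for illustration.

```python
from datetime import datetime

# Assumed set of input formats; extend it to match the real data.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")

def to_iso_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # keep unparseable values explicit instead of guessing

def to_amount(text):
    """Strip a dollar sign and thousands separators, then convert to float."""
    cleaned = text.replace("$", "").replace(",", "").strip()
    return float(cleaned)

print(to_iso_date("05/03/2024"))     # day/month/year input
print(to_iso_date("March 5, 2024"))  # long-form input
print(to_amount("$1,250.50"))
```

Returning `None` for unknown formats keeps bad values visible, so they can be handled as missing data rather than silently misread.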

Duplicate Records

Data duplication leads to incorrect analysis. To avoid this, one can use exact matching techniques or data matching software to identify duplicate records and then remove them.
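Exact matching can be sketched with pandas as follows. The records are invented, and treating the `email` column as the identifying key is an assumption. Normalizing the key first matters, because otherwise differences in case or whitespace hide true duplicates.

```python
import pandas as pd

# Hypothetical customer records; two pairs refer to the same person.
records = pd.DataFrame({
    "email": ["a@example.com", "A@Example.com ", "b@example.com", "b@example.com"],
    "name": ["Ann", "Ann", "Bob", "Bob"],
})

# Normalize the key so that trivially different spellings match exactly.
records["email"] = records["email"].str.strip().str.lower()

# Keep the first occurrence of each email and drop the rest.
deduped = records.drop_duplicates(subset=["email"], keep="first").reset_index(drop=True)

print(deduped)
```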

Noisy Data

Noisy data, meaning data that contains unwanted or incorrect information, adds no value to the model and instead lowers its quality. Such data can be cleaned up with noise reduction filters or outlier analysis, so that models are trained on relevant data only.
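One simple noise reduction filter is a rolling median, sketched here with pandas. The readings are made up, and the window size of 3 is an assumption. A rolling median is only one of many possible filters and suits ordered data such as sensor or time series.

```python
import pandas as pd

# Hypothetical sensor readings with a single spurious spike (35.0).
readings = pd.Series([20.1, 20.3, 20.2, 35.0, 20.4, 20.3, 20.5])

# A centered rolling median replaces each point with the median of its
# neighborhood, which suppresses isolated spikes without removing rows.
smoothed = readings.rolling(window=3, center=True, min_periods=1).median()

print(smoothed)
```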

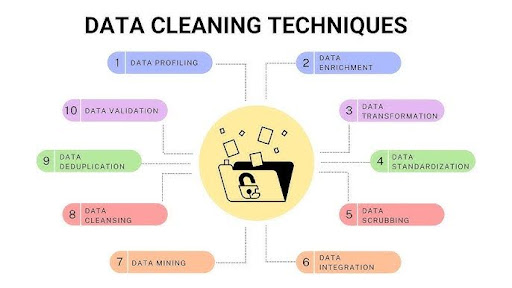

Data Cleaning Techniques

Effective data cleaning techniques are essential for machine learning models to be credible:

Data Imputation Methods

Imputation strategies aim to fill in the gaps. Mean, median, and mode imputation are easy solutions for simple datasets. More advanced methods, such as k-nearest neighbors and predictive imputation, are suitable for bigger and more complex datasets. These techniques help preserve the completeness and integrity of the information.
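For the k-nearest neighbors approach, scikit-learn provides `KNNImputer`. The sketch below uses a tiny invented matrix, and the choice of `n_neighbors=2` is an assumption. Real datasets usually need feature scaling first, so that distances are meaningful.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Hypothetical feature matrix; the second row is missing its second feature.
X = np.array([
    [1.0, 2.0],
    [2.0, np.nan],
    [3.0, 6.0],
    [4.0, 8.0],
])

# Each missing value is replaced by the average of that feature
# over the 2 nearest rows, measured on the features that are present.
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)

print(X_filled)
```

Here the two closest rows to `[2.0, nan]` are `[1.0, 2.0]` and `[3.0, 6.0]`, so the gap is filled with the average of 2.0 and 6.0.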

Dealing With Outliers

Outliers can be treated using statistical methods and visualization tools. Treating them keeps models consistent with the intended patterns without discarding the information that individual data points carry.
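When removing rows is undesirable, one option is to cap extreme values instead. The following sketch clips values to the IQR fences with pandas. The data is invented, and the 1.5 multiplier is the conventional but still assumed choice.

```python
import pandas as pd

# Hypothetical prices with one extreme value.
prices = pd.Series([10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 10.0, 12.0, 11.0, 95.0])

q1, q3 = prices.quantile(0.25), prices.quantile(0.75)
iqr = q3 - q1

# Cap values at the fences rather than dropping the rows, so every
# record stays in the dataset but no single point dominates it.
capped = prices.clip(lower=q1 - 1.5 * iqr, upper=q3 + 1.5 * iqr)

print(capped)
```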

Data Transformation

Data transformation is one of the primary steps of data cleaning. Scaling and normalization bring numerical values onto comparable ranges so that they are easier for machine learning algorithms to handle. It is also necessary to encode all categorical variables correctly so that the model can make use of every type of data.
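Scaling and categorical encoding might look like the sketch below, which combines scikit-learn scalers with `pandas.get_dummies`. The column names and values are assumptions, and one-hot encoding is only one of several encoding schemes.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical dataset with one numeric and one categorical column.
df = pd.DataFrame({
    "height_cm": [150.0, 160.0, 170.0, 180.0],
    "color": ["red", "blue", "red", "green"],
})

# Min-max normalization rescales values into the range [0, 1].
df["height_minmax"] = MinMaxScaler().fit_transform(df[["height_cm"]]).ravel()
# Standardization rescales values to zero mean and unit variance.
df["height_std"] = StandardScaler().fit_transform(df[["height_cm"]]).ravel()

# One-hot encoding turns each category into its own indicator column.
encoded = pd.get_dummies(df, columns=["color"])

print(encoded)
```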

Removing Duplicate Data

Robust processes must be put in place to detect and remove duplicate records. Data matching software can assist by flagging likely duplicates according to a chosen matching standard. This preserves the quality of the information and reduces the chance of building overly complex models that are prone to overfitting.
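Dedicated matching software is beyond the scope of a short example, but the idea of flagging likely duplicates can be sketched with the standard library's `difflib`. The company names and the 0.9 similarity threshold are assumptions. The pairwise comparison shown here is only practical for small lists.

```python
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical company names; the first two differ by a typo.
names = ["Acme Corporation", "Acme Corporaton", "Globex Inc", "Initech"]

SIMILARITY_THRESHOLD = 0.9  # assumed cut-off for "probably the same record"

def similarity(a, b):
    """Return a ratio in [0, 1] describing how alike two strings are."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

# Suggest candidate duplicate pairs for a human or a later rule to confirm.
candidates = [
    (a, b)
    for a, b in combinations(names, 2)
    if similarity(a, b) >= SIMILARITY_THRESHOLD
]

print(candidates)
```

The output is a list of suggestions rather than automatic deletions, which matches the idea of software proposing changes for review.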

Noise Reduction Techniques

The main aim of noise reduction techniques is to discard unnecessary information and keep only the information that matters most. Data that has gone through these processes gives the resulting machine learning model a much better chance of being trained on clean and relevant examples.

Data Cleaning Tools and Libraries

Data cleansing in Python is largely done with libraries such as Pandas, Scikit-learn, and NumPy. These libraries can automate tasks like handling missing values, detecting outliers, and transforming data. Data profiling tools such as pandas-profiling and data-cleaner also help assess data quality and provide insights that guide data cleaning.

Best Practices for Data Cleansing

For better machine learning projects, follow these best practices for data cleansing:

- Risk of Data: Every model depends on the quality of its data, which makes data quality one of the core factors that determine the success of the end result. Hence, it is good practice to fill the gaps, cleanse the data, and treat outliers in the dataset.

- Automated Data Cleansing: Managing large datasets and performing complex cleansing tasks by hand is stressful, and splitting the work across separate teams only adds to the chaos. Because manual cleansing is monotonous work that everyone wants to avoid, it is often approached half-heartedly. Automating cleansing steps wherever possible reduces that burden.

- Continuous Data Cleaning and Evaluation: Data should be cleaned on a regular basis, and data quality should be monitored as new data arrives. Routine cleansing helps maintain model performance over time.
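Continuous monitoring can start with a small, automated quality check that runs on every new batch. The sketch below is one possible shape for such a check. The function names `quality_report` and `needs_cleaning`, the metrics, and the 10% missing-value limit are all assumptions.

```python
import pandas as pd

MAX_MISSING_RATIO = 0.1  # assumed tolerance for missing cells

def quality_report(df: pd.DataFrame) -> dict:
    """Summarize basic quality metrics for one batch of data."""
    total_cells = df.shape[0] * df.shape[1]
    missing = int(df.isna().sum().sum())
    return {
        "rows": len(df),
        "missing_ratio": missing / total_cells if total_cells else 0.0,
        "duplicate_rows": int(df.duplicated().sum()),
    }

def needs_cleaning(report: dict) -> bool:
    """Flag a batch that has too many gaps or any duplicated rows."""
    return report["missing_ratio"] > MAX_MISSING_RATIO or report["duplicate_rows"] > 0

# Hypothetical incoming batch with one duplicate row and one missing score.
batch = pd.DataFrame({"id": [1, 2, 2, 4], "score": [0.5, 0.7, 0.7, None]})
report = quality_report(batch)

print(report, needs_cleaning(report))
```

Running the same report on every batch and tracking the numbers over time makes it easier to notice when data quality starts to drift.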

Issues Related to Data Cleansing

Extraction, Transformation, and Loading (ETL) processes for data cleaning are important, but they are not always smooth sailing:

- Handling of Bigger Data Sets: Bigger datasets make cleansing tasks harder. Working with large datasets requires efficient processing and storage solutions.

- Quality Versus Time: There is a constant trade-off between the time spent cleansing data and the quality of the end product. Identifying the key data problems and resolving the most time-critical ones first helps strike the right balance.
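For datasets too large to load at once, one common approach is to clean the data in chunks. The sketch below uses the `chunksize` option of `pandas.read_csv`. The in-memory CSV stands in for a large file, and the column names and chunk size are assumptions.

```python
import io
import pandas as pd

# Stand-in for a large CSV file on disk; rows 2 and 4 lack a value.
csv_data = io.StringIO("id,value\n1,10\n2,\n3,30\n4,\n5,50\n")

cleaned_chunks = []
# Read and clean a few rows at a time so memory use stays bounded.
for chunk in pd.read_csv(csv_data, chunksize=2):
    cleaned_chunks.append(chunk.dropna(subset=["value"]))

cleaned = pd.concat(cleaned_chunks, ignore_index=True)

print(cleaned)
```

In practice each cleaned chunk would usually be written straight to storage rather than collected in a list, so that the full dataset never has to fit in memory.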

Conclusion

Data cleansing is an important phase in preparing data for machine learning. Correcting frequently encountered data problems and applying proper methods allows your ML models to be built on trustworthy data. Investing effort and resources in data cleansing therefore improves model accuracy as well as the overall outcome of machine learning initiatives.